Could AI help digest papers? There are still technical and legal obstacles

The Allen Institute takes a different approach: it has negotiated deals with more than 50 publishers that allow its developers to data-mine the full text of paywalled papers. Weld said that almost all of the publishers offer access for free because AI brings them traffic. Even so, because of licensing restrictions, Semantic Reader users can access only 8 million of Semantic Scholar's 60 million full-text papers.

Achieving large-scale data mining also requires getting more authors and publishers to adopt non-PDF formats to help machines digest the content of papers efficiently. A White House directive from 2022 requires documents produced with federal funds to be machine-readable, but agencies have yet to come up with details.

Despite the challenges, computer scientists are already working to develop more sophisticated AI that can glean richer information from the literature. They hope to gather clues that enhance drug discovery and to keep systematic reviews continuously updated. For example, research supported by the Defense Advanced Research Projects Agency is exploring a system that can automatically generate scientific hypotheses.

For now, scientists using AI tools need to maintain a healthy dose of skepticism, says Hamed Zamani, a researcher on interactive information access systems at the University of Massachusetts Amherst: "LLMs will definitely get better. But right now, they have a lot of limitations. They provide wrong information. Scientists should be very aware of this and carefully examine their output."

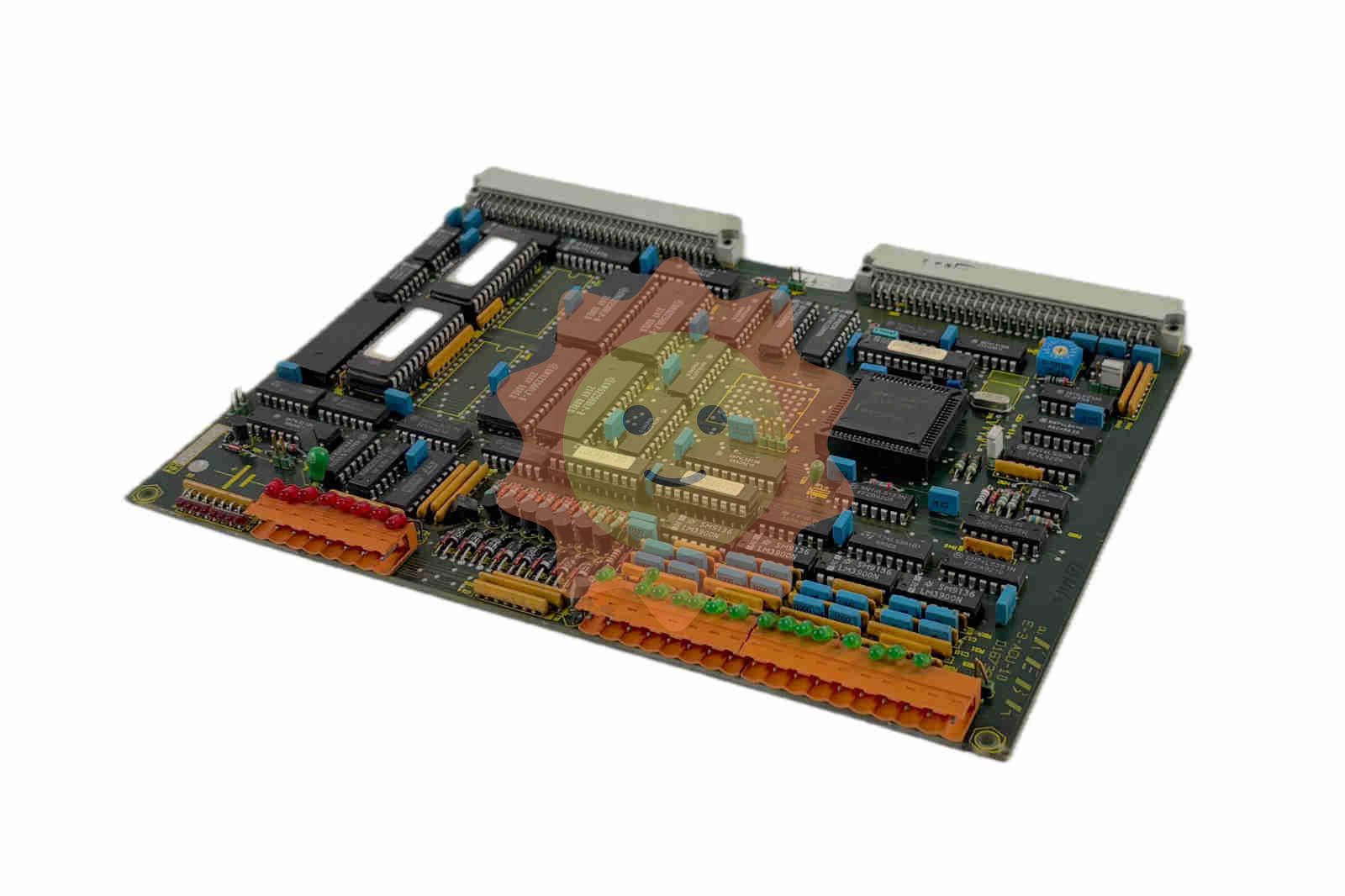

- ABB

- General Electric

- EMERSON

- Honeywell

- HIMA

- ALSTOM

- Rolls-Royce

- MOTOROLA

- Rockwell

- Siemens

- Woodward

- YOKOGAWA

- FOXBORO

- KOLLMORGEN

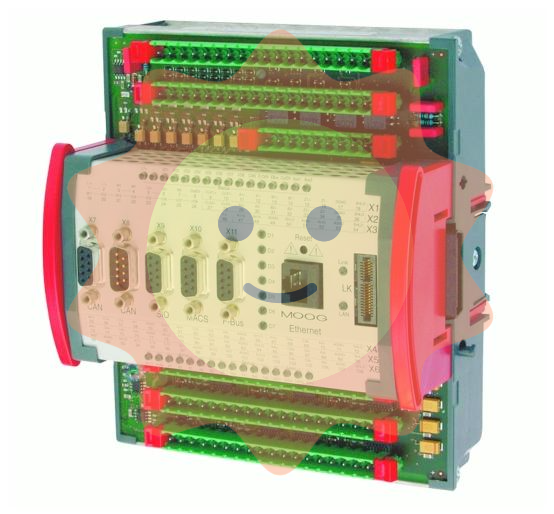

- MOOG

- KB

- YAMAHA

- BENDER

- TEKTRONIX

- Westinghouse

- AMAT

- AB

- XYCOM

- Yaskawa

- B&R

- Schneider

- Kongsberg

- NI

- WATLOW

- ProSoft

- SEW

- ADVANCED

- Reliance

- TRICONEX

- METSO

- MAN

- Advantest

- STUDER

- KONGSBERG

- DANAHER MOTION

- Bently

- Galil

- EATON

- MOLEX

- DEIF

- B&W

- ZYGO

- Aerotech

- DANFOSS

- Beijer

- Moxa

- Rexroth

- Johnson

- WAGO

- TOSHIBA

- BMCM

- SMC

- HITACHI

- HIRSCHMANN

- Application field

- XP POWER

- CTI

- TRICON

- STOBER

- Thinklogical

- Horner Automation

- Meggitt

- Fanuc

- Baldor

- SHINKAWA

- Other Brands