Tektronix 3 Series Mixed Domain Oscilloscope

Switch the vertical scale to 100 mV/div and repeat the measurement; the impedance must still be between 990 kΩ and 1.01 MΩ;

Switch the instrument's termination impedance to 50 Ω, set the output impedance of the voltage source to 50 Ω, and repeat the test; the impedance should be between 49.5 Ω and 50.5 Ω;

Repeat the above steps for all channels (channels 1-2 on the MDO32, channels 1-4 on the MDO34).
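
Both impedance windows quoted above correspond to ±1% of the nominal value (990 kΩ to 1.01 MΩ around 1 MΩ, 49.5 Ω to 50.5 Ω around 50 Ω). As a minimal sketch, assuming the measured values are entered by hand, the pass/fail check could look like this; the function name `within_tolerance` is hypothetical and not part of any Tektronix tool:

```python
def within_tolerance(measured_ohms, nominal_ohms, tolerance=0.01):
    """Return True if the measured impedance lies within +/- tolerance of nominal.

    tolerance=0.01 (1%) is inferred from the limits quoted in the procedure.
    """
    low = nominal_ohms * (1 - tolerance)
    high = nominal_ohms * (1 + tolerance)
    return low <= measured_ohms <= high


if __name__ == "__main__":
    # Illustrative readings only, not real measurement data.
    print(within_tolerance(1_005_000, 1_000_000))  # 1 MΩ termination
    print(within_tolerance(49.8, 50))              # 50 Ω termination
```

The same helper can be reused for every channel and vertical scale, since only the nominal impedance changes.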

② DC balance test (verifying zero input offset)

Test objective: To measure the signal offset at zero input, since excessive offset causes errors when measuring small DC signals.

Testing process:

Connect a 50 Ω BNC terminator to channel 1 (no signal input) and press "Default Setup";

Channel 1 settings: vertical scale 1 mV/div, termination impedance 50 Ω, bandwidth limit 20 MHz, acquisition mode "Average" (16 waveforms);

Touch "Measure" → "Amplitude" → "Mean" to add a mean measurement, and record the mean voltage;

Convert the mean voltage to divisions (div = voltage / vertical scale); the result should be within -0.5 div to +0.5 div;

Switch the vertical scale to 2 mV/div (allowed range -0.25 div to +0.25 div) and 10 mV/div (allowed range -0.2 div to +0.25 div), repeating the measurement at each setting;

Set the bandwidth limit to 250 MHz and then to Full, switch the termination impedance to 1 MΩ, and repeat the test;

Repeat the above steps for all channels.
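
The conversion from mean voltage to divisions is a single division by the vertical scale. The sketch below illustrates it under the assumption that the allowed range is passed in explicitly for each scale setting; the function names are hypothetical:

```python
def offset_in_divisions(mean_volts, volts_per_div):
    """Convert a measured mean voltage to divisions at the given vertical scale."""
    return mean_volts / volts_per_div


def dc_balance_ok(mean_volts, volts_per_div, low_div, high_div):
    """Return True if the offset, expressed in divisions, lies within the allowed range."""
    offset_div = offset_in_divisions(mean_volts, volts_per_div)
    return low_div <= offset_div <= high_div


if __name__ == "__main__":
    # Illustrative reading: 0.3 mV mean at 1 mV/div, limits -0.5 div to +0.5 div.
    print(dc_balance_ok(0.3e-3, 1e-3, -0.5, 0.5))
    # Illustrative reading: 0.6 mV mean at 2 mV/div, limits -0.25 div to +0.25 div.
    print(dc_balance_ok(0.6e-3, 2e-3, -0.25, 0.25))
```

Note that the same absolute offset can pass at one scale and fail at another, because the limit is stated in divisions rather than volts.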

③ Analog bandwidth test (verifying frequency response)

Test objective: To confirm that the instrument's -3 dB bandwidth meets specification at each vertical scale, since insufficient bandwidth distorts high-speed signals.

Testing process (using the 1 GHz model as an example):

Connect the leveled sine wave generator to channel 1, with the generator output impedance at 50 Ω and the channel 1 termination impedance at 50 Ω;

Instrument settings: vertical scale 10 mV/div, acquisition mode "Sample", horizontal scale 4 ns/div;

Set the generator to output a 10 MHz, 8 div peak-to-peak signal (i.e. 80 mVpp) and record the peak-to-peak measurement as "Vin-pp";

Adjust the generator frequency to 1 GHz (the maximum bandwidth frequency for 10 mV/div) and record "Vbw-pp";

Calculate the gain as Vbw-pp / Vin-pp, which should be ≥ 0.707 (the -3 dB point);

Switch the vertical scale to 5 mV/div (maximum bandwidth frequency 500 MHz), 2 mV/div (350 MHz), and 1 mV/div (150 MHz), repeating the test at each setting;

Repeat the above steps for all channels.
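
The gain check above is a ratio of two peak-to-peak readings compared against 0.707, which is roughly -3 dB as a voltage ratio. A minimal sketch of that arithmetic follows, with hypothetical function names and made-up readings:

```python
import math


def bandwidth_gain(v_bw_pp, v_in_pp):
    """Ratio of the amplitude at the bandwidth frequency to the 10 MHz reference amplitude."""
    return v_bw_pp / v_in_pp


def gain_db(gain):
    """Express a voltage ratio in decibels (20 * log10 for amplitude ratios)."""
    return 20 * math.log10(gain)


def passes_bandwidth(v_bw_pp, v_in_pp, min_gain=0.707):
    """Return True if the gain at the bandwidth frequency is at or above the -3 dB point."""
    return bandwidth_gain(v_bw_pp, v_in_pp) >= min_gain


if __name__ == "__main__":
    # Illustrative readings: 80 mVpp reference, 60 mVpp at the bandwidth frequency.
    g = bandwidth_gain(60e-3, 80e-3)
    print(g, gain_db(g), passes_bandwidth(60e-3, 80e-3))
```

Working with the ratio keeps the check independent of the vertical scale, so the same function applies at 10, 5, 2, and 1 mV/div.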

④ DC gain accuracy test (verifying the accuracy of DC signal amplification)

Test objective: To confirm that the instrument's gain for DC signals is accurate, since excessive gain error leads to inaccurate voltage measurements.

Testing process:

Connect the DC voltage source to channel 1, with the voltage source output impedance set to 1 MΩ, the channel 1 termination impedance set to 1 MΩ, and the bandwidth limit set to 20 MHz;

Instrument settings: vertical scale 1 mV/div, acquisition mode "Average" (16 waveforms), trigger source "AC Line";

Set the voltage source to output -3.5 mV (Vnegative) and record the mean measurement as "Vnegative-measured";

Set the voltage source to output +3.5 mV (Vpositive) and record the mean measurement as "Vpositive-measured";

Calculate Vdiff = |Vnegative-measured - Vpositive-measured|; the expected Vdiff is 7 mV (1 mV/div × 7 div);

Calculate gain accuracy = ((Vdiff - 7 mV) / 7 mV) × 100%, which should be within -2.5% to +2.5%;

Switch the vertical scale to 2 mV/div (allowed range -2.0% to +2.0%) and 5 mV/div (allowed range -1.5% to +1.5%), repeating the test at each setting;

Repeat the above steps for all channels.
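
The gain accuracy formula above can be checked with a few lines of arithmetic. This is a sketch only: the function name is hypothetical, the readings are invented for illustration, and all voltages are given in millivolts:

```python
def gain_error_percent(v_neg_measured, v_pos_measured, v_neg_set, v_pos_set):
    """Gain error in percent: (Vdiff - Vexpected) / Vexpected * 100.

    All arguments must use the same unit (millivolts in the example below).
    """
    v_diff = abs(v_neg_measured - v_pos_measured)
    v_expected = abs(v_neg_set - v_pos_set)
    return (v_diff - v_expected) / v_expected * 100.0


if __name__ == "__main__":
    # 1 mV/div case: source set to -3.5 mV and +3.5 mV, so the expected Vdiff is 7 mV.
    error = gain_error_percent(-3.45, 3.48, -3.5, 3.5)
    print(error, -2.5 <= error <= 2.5)
```

Using the difference of two readings rather than a single reading cancels any fixed offset, so the result reflects gain error alone.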

(3) AFG testing (verifying signal source accuracy)

① AFG frequency accuracy test

Testing process:

Connect the AFG output to the frequency counter, press "Default Setup" on the instrument, and touch "AFG";

AFG settings: waveform "Sine", amplitude 2.5 Vpp, load impedance 50 Ω, frequency 10 kHz;

Read the frequency counter; the value should be within 9.9987 kHz to 10.0013 kHz (±130 ppm);

Switch the frequency to 50 MHz (above 10 kHz the allowed tolerance is ±50 ppm, i.e. 49.9975 MHz to 50.0025 MHz) and repeat the test;

Switch the waveform to "Square" and repeat the test at 25 kHz (±130 ppm allowed) and 25 MHz (±50 ppm allowed).
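
The frequency windows above follow directly from the ppm tolerance: the allowed deviation is the nominal frequency multiplied by the tolerance and by 10^-6. A small sketch, assuming a hypothetical helper named `frequency_limits`:

```python
def frequency_limits(nominal_hz, tolerance_ppm):
    """Return (low, high) frequency limits in Hz for a +/- ppm tolerance."""
    delta = nominal_hz * tolerance_ppm * 1e-6
    return nominal_hz - delta, nominal_hz + delta


def frequency_ok(measured_hz, nominal_hz, tolerance_ppm):
    """Return True if the counter reading lies inside the ppm window."""
    low, high = frequency_limits(nominal_hz, tolerance_ppm)
    return low <= measured_hz <= high


if __name__ == "__main__":
    # Test points and tolerances taken from the procedure above.
    for nominal, ppm in [(10e3, 130), (50e6, 50), (25e3, 130), (25e6, 50)]:
        print(nominal, ppm, frequency_limits(nominal, ppm))
```

For the 10 kHz sine wave this gives a deviation of 1.3 Hz, and for 50 MHz a deviation of 2.5 kHz, matching the ranges quoted in the steps.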

② AFG amplitude accuracy test

Testing process:

Connect the AFG output to the DMM through an in-line 50 Ω terminator, and set the DMM to AC RMS measurement;

AFG settings: waveform "Square", frequency 1 kHz, load impedance 50 Ω, amplitude 20 mVpp;

Read the DMM value; it should be within 9.35 mV to 10.65 mV (±1.5% + 1 mV);

Switch the amplitude to 1 Vpp (allowed range 490.5 mV to 509.5 mV) and repeat the test.

(4) Spectrum analyzer testing (verifying RF analysis capability)

① Displayed Average Noise Level (DANL) test

Test objective: To measure the inherent noise level of the spectrum analyzer; the lower the DANL, the better it can detect weak RF signals.

Testing process:

Connect a 50 Ω terminator to the RF input (no signal), press "Default Setup" on the instrument, and touch "RF";

RF settings: trace "Average", detection method "Average", reference level -15 dBm, RBW Auto;

- ABB

- General Electric

- EMERSON

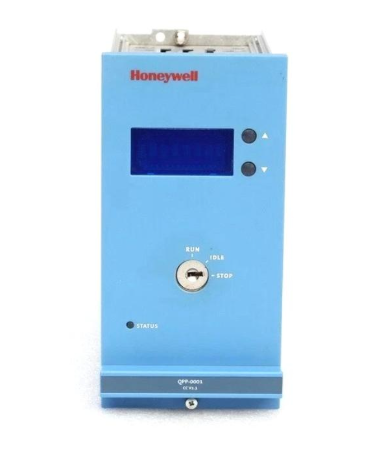

- Honeywell

- HIMA

- ALSTOM

- Rolls-Royce

- MOTOROLA

- Rockwell

- Siemens

- Woodward

- YOKOGAWA

- FOXBORO

- KOLLMORGEN

- MOOG

- KB

- YAMAHA

- BENDER

- TEKTRONIX

- Westinghouse

- AMAT

- AB

- XYCOM

- Yaskawa

- B&R

- Schneider

- Kongsberg

- NI

- WATLOW

- ProSoft

- SEW

- ADVANCED

- Reliance

- TRICONEX

- METSO

- MAN

- Advantest

- STUDER

- KONGSBERG

- DANAHER MOTION

- Bently

- Galil

- EATON

- MOLEX

- DEIF

- B&W

- ZYGO

- Aerotech

- DANFOSS

- Beijer

- Moxa

- Rexroth

- Johnson

- WAGO

- TOSHIBA

- BMCM

- SMC

- HITACHI

- HIRSCHMANN

- Application field

- XP POWER

- CTI

- TRICON

- STOBER

- Thinklogical

- Horner Automation

- Meggitt

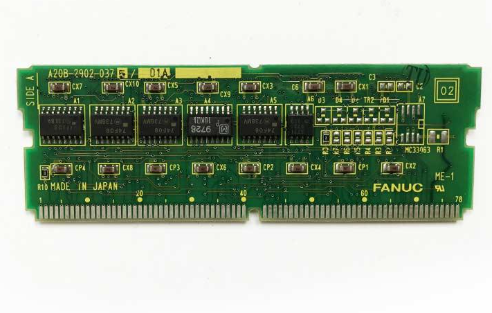

- Fanuc

- Baldor

- SHINKAWA

- Other Brands